How we imported the Etymological lexicon of modern Breton from Wikisource into Wikidata lexicographical data

The Lexique étymologique du breton moderne (Etymological lexicon of modern Breton) is a dictionary about the Breton language, written by Victor Henry and published in 1900. Starting in summer 2021, Nicolas Vigneron and I imported the content of the dictionary from Wikisource into Wikidata lexicographical data, in a structured format. Before the import, there were 283 Breton lexemes (≈ words) in Wikidata; after the import, there were more than 4,000.

This post is divided into three sections:

- Project: how we proceeded (this part requires no specific linguistic or technical knowledge);

- Breton: for people interested in the Breton language;

- Technical: for people interested in the technical side of the project.

Project

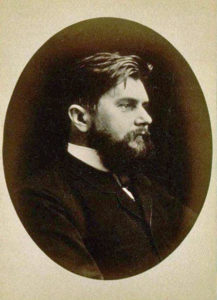

Victor Henry was a French philologist and linguist. He wrote several books, including the Lexique étymologique du breton moderne (Etymological lexicon of modern Breton), published in 1900, which is a dictionary, in French, about the Breton language. As Victor Henry died in 1907, more than 70 years ago, his work is in the public domain. The book is available on Wikisource, in plain text formatted with wikicode. Together with Nicolas Vigneron, I imported the content of the dictionary into Wikidata lexicographical data, in a structured format.

Parsing

The first step was to parse the content of the dictionary to transform it into a machine-readable format. It was an iterative process, with parsing rules defined, implemented and tested step by step. The results were regularly checked in order to:

- validate the rules,

- improve the proofreading of the book in Wikisource. Hundreds of fixes were made in the book on Wikisource during this phase, so this project benefited both Wikidata and Wikisource.

To help check the data quality, several human-readable reports were created:

- obviously, the list of lexemes to be imported,

- a list of parsing errors (for instance, unrecognized lexical categories),

- letter frequencies (in our case, unigrams and bigrams).

Import

Several lexemes were already present in Wikidata. They were edited before the import to avoid creating duplicates. In our case, we ensured that each lexeme had a statement described by source (P1343) with the value Lexique étymologique du breton moderne (Q19216625) and a qualifier stated as (P1932) with the headword of the corresponding notice in the dictionary (example).

When importing data with a bot into Wikidata, it is mandatory to request permission. It is a useful process to discuss the import with the community and to gather relevant feedback. In our case, the discussion took place on Wikidata and on the Telegram group dedicated to Wikidata lexicographical data. This step should be started in parallel with the parsing to take the feedback into account early in the development phase.

Once the quality of the parsed data was considered good enough, the first edits were made on Wikidata. This made it possible to fix a few bugs and to finish the permission process.

The main import took place at the beginning of January 2022, with more than 3,700 lexemes created.

Fixes and enhancements

Some things were judged too difficult to import properly, so the imported data is not perfect. There are no obvious errors, but there is still work to do, such as adding senses and etymology. This work is of course collaborative. For instance, you can work on lexemes from the import that still don’t have any sense.

A first online workshop was organized in January 2022. It gathered 7 editors who were able to discover lexicographical data on Wikidata and to improve about 150 lexemes in Breton.

Tools

Several tools were used to facilitate the project, including:

- Etherpad, to have a single place to take notes.

- Wikimédia France’s instance of BigBlueButton, for meetings.

Breton language

Examples of imported lexemes:

Implemented rules

Here is what was automatically imported from the dictionary:

- To avoid mismatches, all apostrophes are converted to vertical apostrophes.

- Each lexeme has:

  - a language (Breton).

  - a lemma: the first value of the headword (values are separated by commas).

  - a lexical category (noun, verb, etc. full list).

  - a reference to the Lexique étymologique du breton moderne, with:

    - the page number of the entry,

    - a link to the entry on Wikisource,

    - the headword of the entry,

    - the list of the forms.

  - for nouns: a grammatical gender (feminine or masculine), depending on their lexical category.

  - for verbs: a conjugation class (mostly regular Breton conjugation).

  - forms, from the headword, and where it applies:

    - a dialect (Cornouaille, Leon, Tregor, Vannes),

    - grammatical features:

      - for adjectives: positive,

      - for nouns: number (singular or plural), depending on their lexical category,

      - for verbs: infinitive.

- Lexemes whose lemma starts with a star are marked as instance of reconstructed word.

Not implemented

Here is a list of things that were not automatically imported:

- Variants are not merged. For instance, krouilh had to be manually merged into one lexeme after the import because it appears twice in the dictionary, as Kourouḷ and as Krouḷ.

- Senses are not created.

- Etymology is not filled.

Technical side

The import was made using PHP and Python scripts, divided into three parts:

- crawler.php: to crawl the text from Wikisource and strip out the text that was not useful in our case.

- parser.py: to parse the crawled text, transform it into Wikibase format, and generate some reports.

- bot.py: to import generated data into Wikidata.

Crawling

Crawling Wikisource was done with a PHP script. You can use any tool or scripting language to do the same.
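For readers who would rather stay in Python, here is a minimal sketch of the same idea, not the actual crawler.php. It assumes the book is read through the standard MediaWiki Action API of French Wikisource (the endpoint is an assumption), and the page title passed in is whatever page you want to fetch; the function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Assumption: the dictionary pages are served by French Wikisource.
API_URL = "https://fr.wikisource.org/w/api.php"


def build_query_url(title: str) -> str:
    """Build an Action API URL asking for the latest wikitext of one page."""
    params = {
        "action": "query",
        "prop": "revisions",
        "rvprop": "content",
        "rvslots": "main",
        "titles": title,
        "format": "json",
        "formatversion": "2",
    }
    return API_URL + "?" + urllib.parse.urlencode(params)


def fetch_wikitext(title: str) -> str:
    """Download the wikitext of a page (performs a network request)."""
    request = urllib.request.Request(
        build_query_url(title),
        # Wikimedia asks clients to send a descriptive User-Agent.
        headers={"User-Agent": "lexicon-crawler-sketch/0.1 (example)"},
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # With formatversion=2, "pages" is a list rather than a dict.
    return data["query"]["pages"][0]["revisions"][0]["slots"]["main"]["content"]
```

Cleaning the returned wikicode (templates, page headers, and so on) depends on how the book is formatted on Wikisource, so it is left out of this sketch.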

Parsing

Parsing was done with a Python script. It was first written in PHP, then rewritten once we decided to use Python for the import step.
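To give an idea of what such rules look like, here is a simplified sketch of three of the headword rules listed in the Breton section (apostrophe normalization, first comma-separated value as the lemma, leading star for reconstructed words), plus the letter-frequency report. It is not the actual parser.py: the function names, the returned dictionary, and the exact set of apostrophe characters are assumptions made for illustration.

```python
from collections import Counter

# Assumption: these are the typographic variants to normalize.
APOSTROPHES = "\u2019\u2018\u02bc"  # ’ ‘ ʼ


def normalize_apostrophes(text: str) -> str:
    """Replace typographic apostrophes with the vertical one (')."""
    for char in APOSTROPHES:
        text = text.replace(char, "'")
    return text


def parse_headword(headword: str) -> dict:
    """Split a headword on commas; the first value becomes the lemma."""
    values = [
        normalize_apostrophes(value.strip())
        for value in headword.split(",")
        if value.strip()
    ]
    if not values:
        raise ValueError("empty headword")
    lemma = values[0]
    return {
        "lemma": lemma,
        "forms": values,
        # A leading star marks a reconstructed word.
        "reconstructed": lemma.startswith("*"),
    }


def letter_frequencies(words, n: int = 1) -> Counter:
    """Count n-grams of letters (n=1: unigrams, n=2: bigrams)."""
    counts = Counter()
    for word in words:
        lowered = word.lower()
        for i in range(len(lowered) - n + 1):
            counts[lowered[i:i + n]] += 1
    return counts
```

A report like `letter_frequencies(lemmas, n=2).most_common()` makes rare bigrams stand out, and those are often proofreading errors worth checking in the source text.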

Import

There are many ways to import data into Wikidata. In our case, we needed a tool which was able to create new lexemes. Here are some options that were considered:

- QuickStatements: can update existing lexemes, but not create new ones (T220985).

- OpenRefine: does not support lexemes (#2240).

- Wikidata Toolkit: supports lexemes (#437), but probably not the easiest tool to import data into Wikidata.

- Pywikibot: does not support lexemes (T189321), but can interact with the MediaWiki API.

- WikidataIntegrator: lack of examples when reviewed.

- WikibaseIntegrator: fork of WikidataIntegrator, rewrite in progress.

  - Example of usage: LexUtils.

- LexData: not updated for several years, multiple forks.

I eventually decided to use Pywikibot for the main import. It had several advantages:

- Well-maintained tool, well-known in the Wikimedia community.

- Easy calls to the MediaWiki API, with the method _simple_request (even though it is supposed to be private).

- Supports bot passwords.

- Supports maxlag.

The drawback is that it does not support lexemes. The JSON sent to the MediaWiki API had to be created “by hand”. In this regard, this post on Phabricator was really useful to understand some subtleties, like how to create new forms with the keyword add.
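As an illustration, here is a minimal sketch of what building that JSON can look like. It is not the actual bot.py: the function name and parameters are hypothetical, and the structure follows the Wikibase lexeme JSON format as I understand it, with each new form carrying an empty add key. The item IDs for the language and the lexical category are passed in by the caller.

```python
import json


def build_lexeme_data(lemma, language_code, language_item,
                      category_item, form_values):
    """Build the data payload describing a new lexeme and its forms."""
    forms = [
        {
            # The "add" keyword tells the API to create a new form.
            "add": "",
            "representations": {
                language_code: {"language": language_code, "value": value}
            },
            "grammaticalFeatures": [],
            "claims": [],
        }
        for value in form_values
    ]
    return {
        "type": "lexeme",
        "language": language_item,          # item ID of the language
        "lexicalCategory": category_item,   # item ID of the category
        "lemmas": {
            language_code: {"language": language_code, "value": lemma}
        },
        "forms": forms,
    }


def serialize(data: dict) -> str:
    """Serialize the payload, keeping non-ASCII letters readable."""
    return json.dumps(data, ensure_ascii=False)
```

The serialized string would then be sent as the data parameter of the wbeditentity action, with new set to lexeme and a CSRF token. Statements, such as the reference to the dictionary, are left out here to keep the sketch short.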

Some refinements were made using QuickStatements.

Source code

The source code is released under the CC0 license (public domain dedication). It has already been reused by another project!

Photo by Antoine Meyer, public domain.

Envel Le Hir
